Toxicity - a Hugging Face Space by evaluate-measurement

Description

The toxicity measurement aims to quantify the toxicity of the input texts using a pretrained hate speech classification model.
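
The scoring step can be sketched as follows. This is a minimal sketch, not the Space's implementation. It assumes a classifier has already returned, for each input text, a probability per label, and it treats the probability of a hypothetical `hate` label as that text's toxicity score. The optional aggregations (`maximum` over all texts, or the `ratio` of texts scoring at or above a `threshold` of 0.5) are also assumptions chosen for illustration.

```python
from typing import Dict, List, Optional, Union

# Hypothetical classifier output for one text: label name -> probability.
ClassifierOutput = Dict[str, float]


def toxicity_scores(outputs: List[ClassifierOutput], toxic_label: str = "hate") -> List[float]:
    """Per-text toxicity: the probability assigned to the (assumed) toxic label."""
    return [out.get(toxic_label, 0.0) for out in outputs]


def aggregate(
    scores: List[float],
    aggregation: Optional[str] = None,
    threshold: float = 0.5,
) -> Union[List[float], float]:
    """Return raw scores, their maximum, or the fraction at or above threshold."""
    if aggregation is None:
        return scores
    if not scores:
        raise ValueError("cannot aggregate an empty list of scores")
    if aggregation == "maximum":
        return max(scores)
    if aggregation == "ratio":
        # Fraction of texts whose score meets or exceeds the threshold.
        return sum(s >= threshold for s in scores) / len(scores)
    raise ValueError(f"unknown aggregation: {aggregation!r}")


# Usage with made-up classifier outputs for three texts.
outputs = [
    {"hate": 0.02, "nothate": 0.98},
    {"hate": 0.91, "nothate": 0.09},
    {"hate": 0.40, "nothate": 0.60},
]
scores = toxicity_scores(outputs)
print(scores)                        # [0.02, 0.91, 0.4]
print(aggregate(scores, "maximum"))  # 0.91
print(aggregate(scores, "ratio"))    # one of three texts is at or above 0.5
```

Keeping per-text scoring separate from aggregation lets the same scores be reported individually, as a worst case, or as a rate of toxic outputs.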

Continuous LLM Monitoring - Observability to ensure Responsible AI

R] All about evaluating Large language models : r/MachineLearning

Efficacy and toxicity of particle radiotherapy in WHO grade II and grade III meningiomas: a systematic review in: Neurosurgical Focus Volume 46 Issue 6 (2019) Journals

Human Evaluation of Large Language Models: How Good is Hugging Face's BLOOM?

Building a Comment Toxicity Ranker Using Hugging Face's Transformer Models, by Jacky Kaub

Descriptor-Free Deep Learning QSAR Model for the Fraction Unbound in Human Plasma

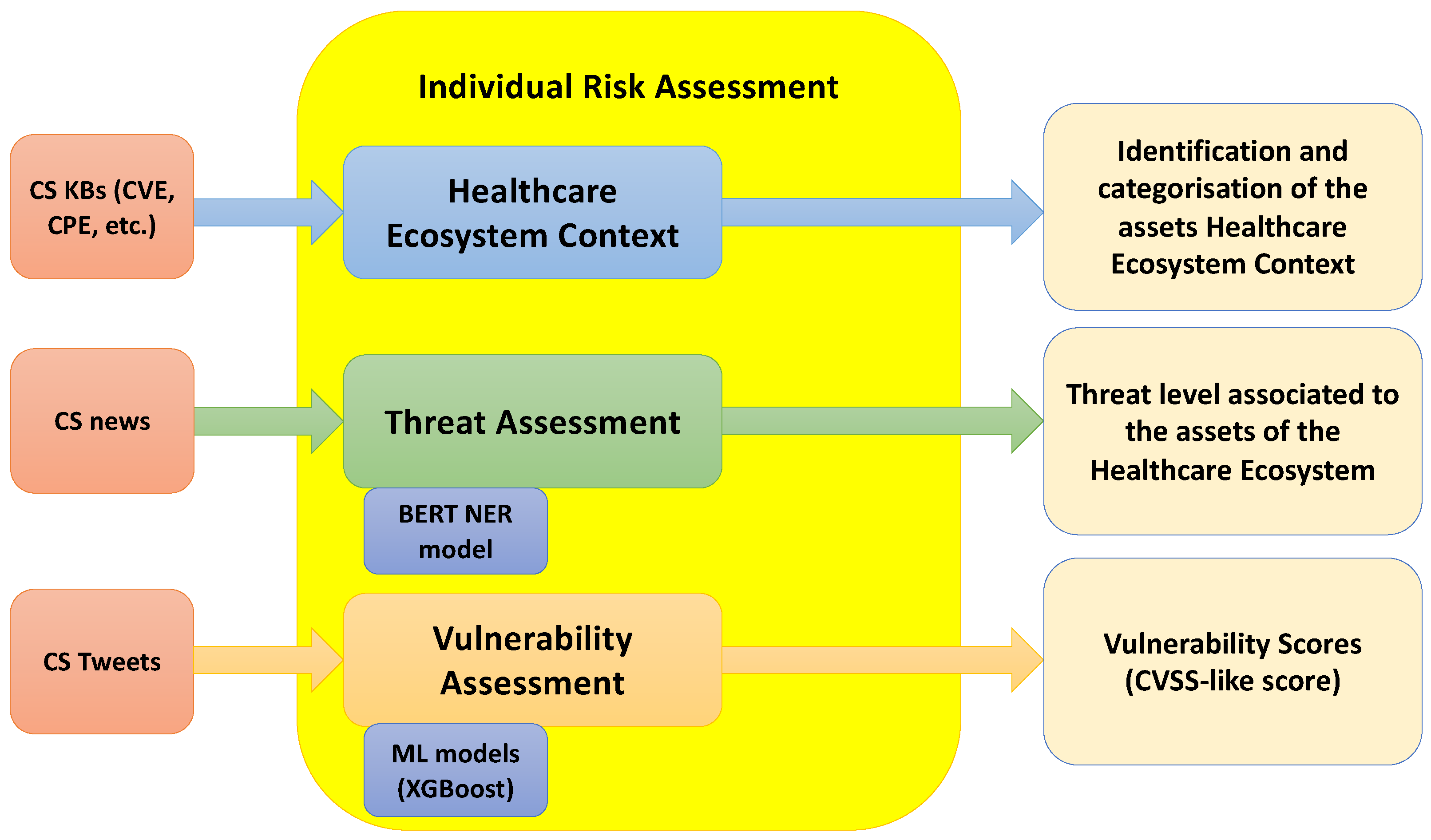

Sensors, Free Full-Text

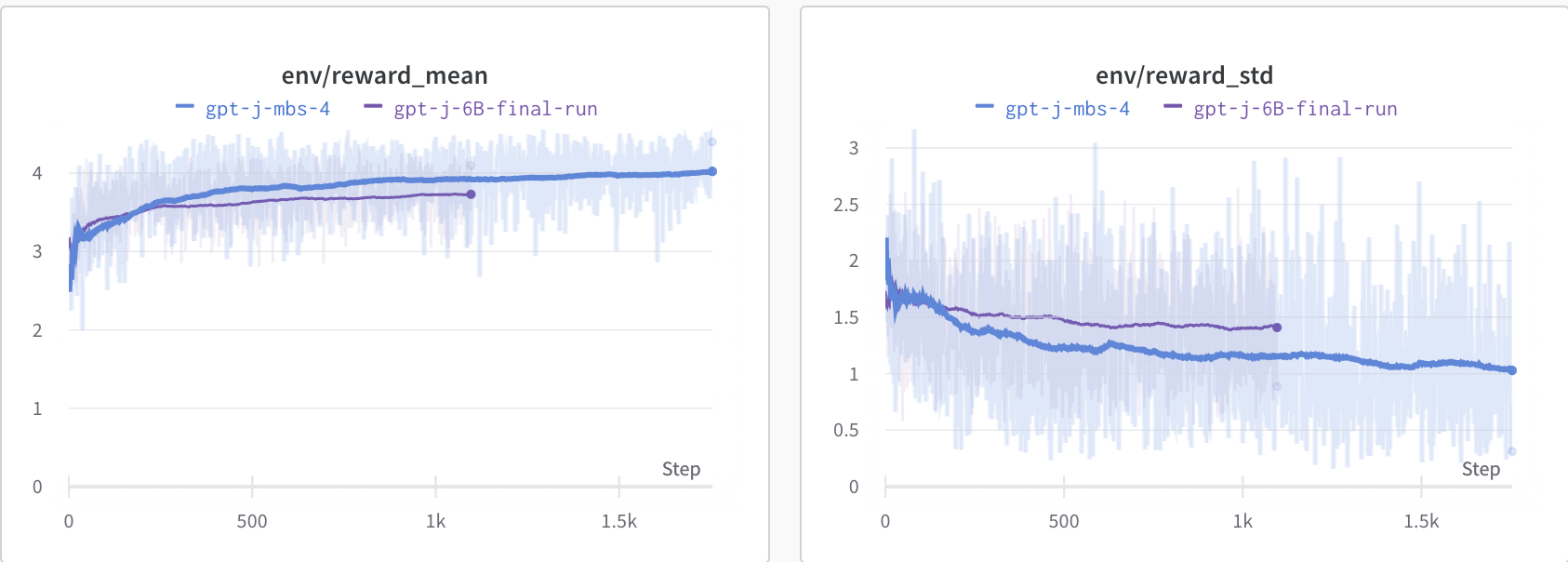

Detoxifying a Language Model using PPO

Pre-Trained Language Models and Their Applications - ScienceDirect

BLOOMChat: a New Open Multilingual Chat LLM

Modern drug discovery using ethnobotany: A large-scale cross-cultural analysis of traditional medicine reveals common therapeutic uses - ScienceDirect

evaluate-measurement (Evaluate Measurement)

AI Chatbots Got Big—and Their Ethical Red Flags Got Bigger
