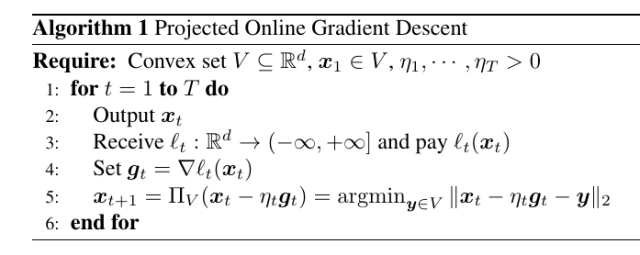

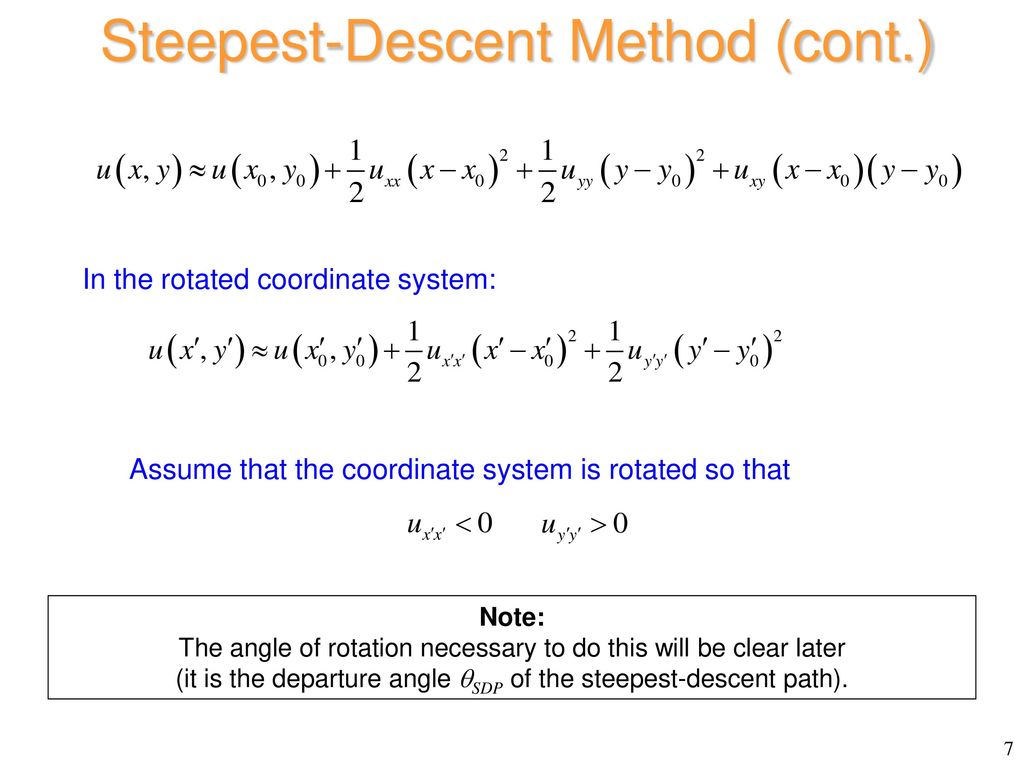

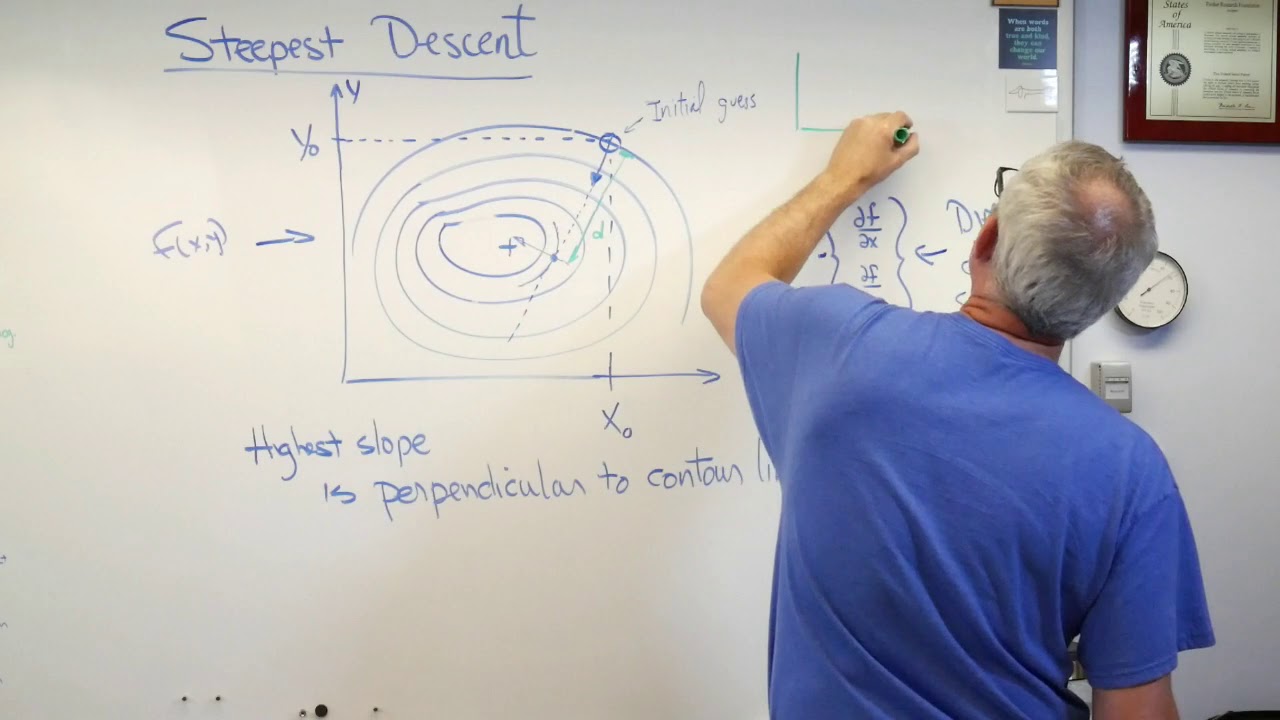

Applied Optimization - Steepest Descent

Description

Adam is an effective gradient descent algorithm for ODEs. a Using a

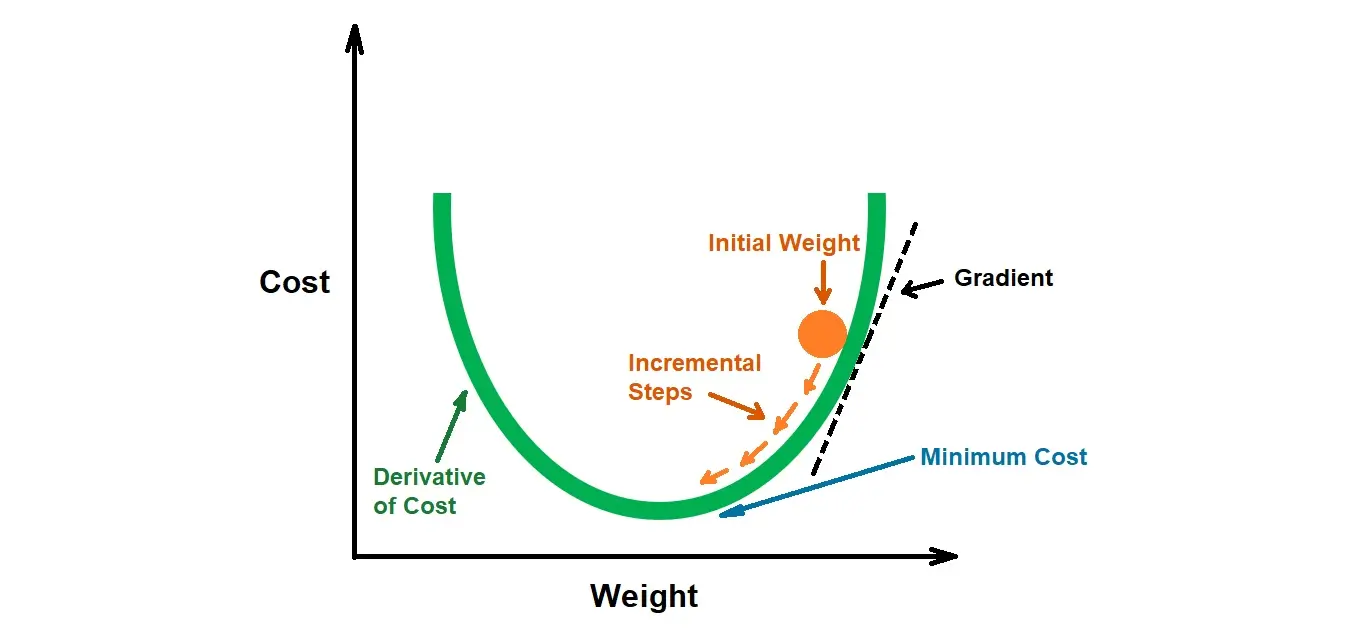

Gradient Descent — ML Glossary documentation

nonlinear optimization - Do we need steepest descent methods, when minimizing quadratic functions? - Mathematics Stack Exchange

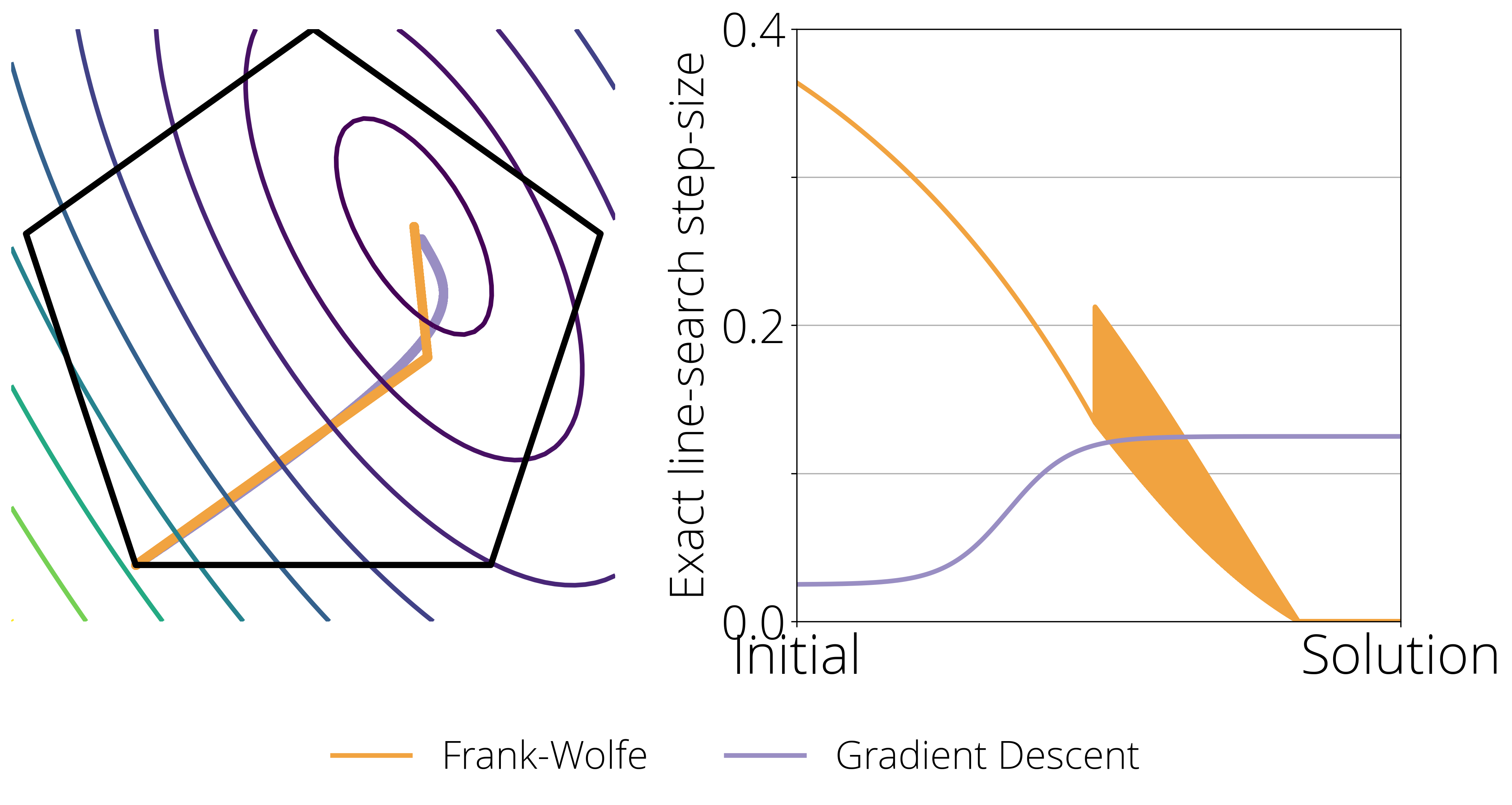

Risky Giant Steps Can Solve Optimization Problems Faster

Stochastic gradient descent for hybrid quantum-classical optimization – Quantum

Gradient Descent for Optimizing Machine Learning Models

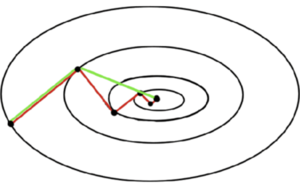

Steepest Descent - an overview

Notes on the Frank-Wolfe Algorithm, Part III: backtracking line-search

Stochastic Gradient Descent Algorithm With Python and NumPy – Real Python

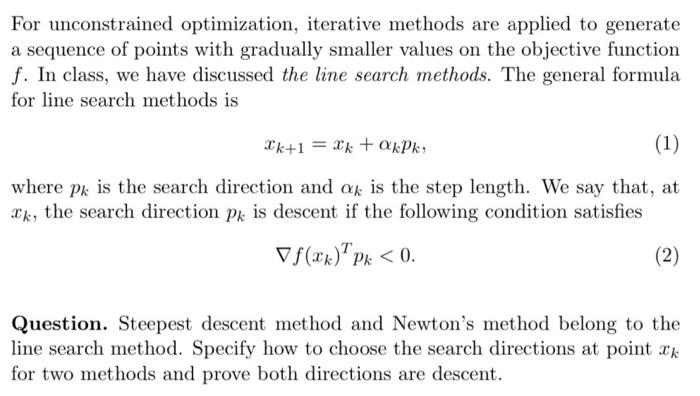

Solved For unconstrained optimization, iterative methods are

Mathematics, Free Full-Text
